Introduction

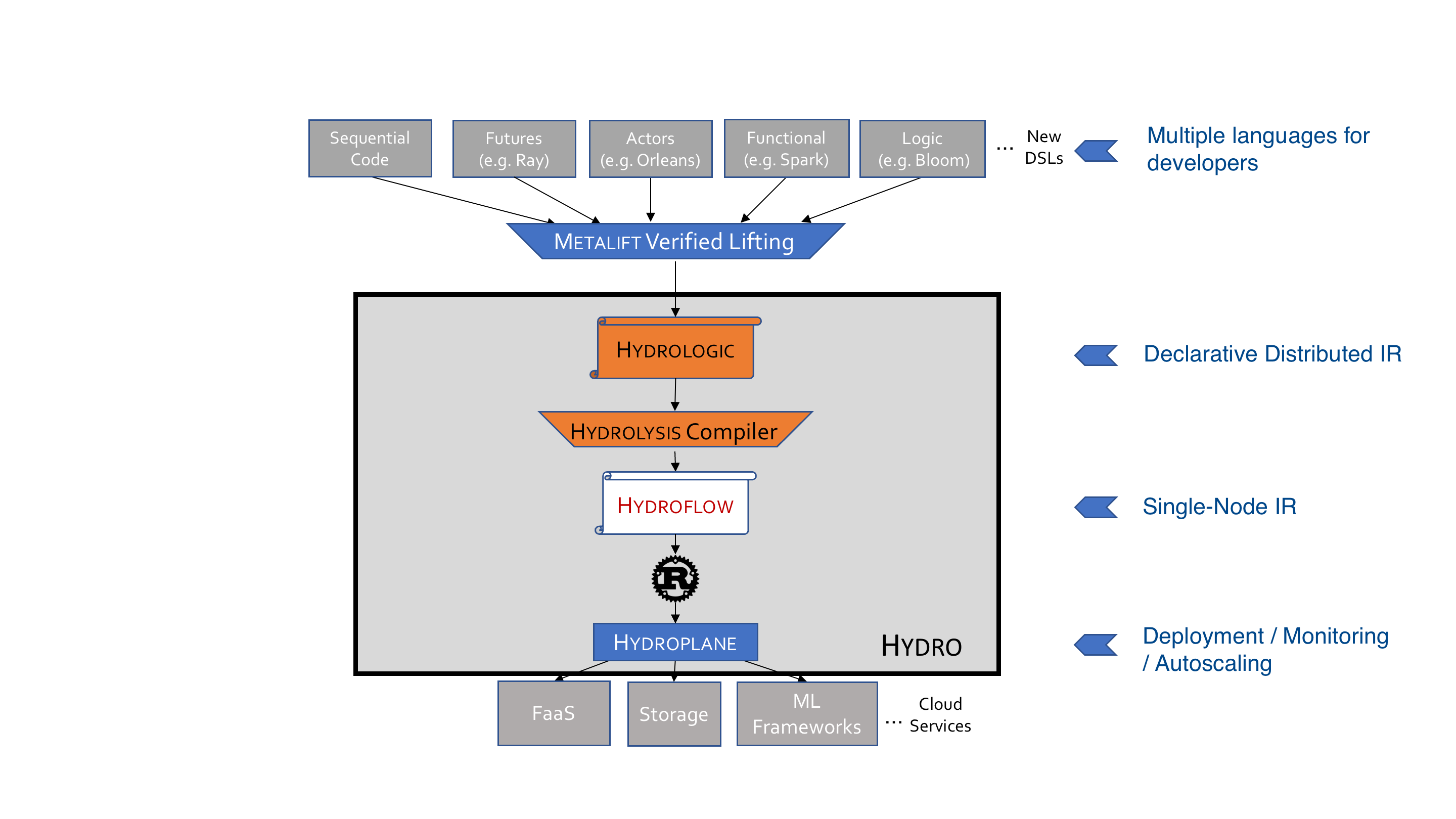

Hydroflow is a compiler for low-latency dataflow programs, written in Rust. Hydroflow is the runtime library for the Hydro language stack, which is under development as a complete compiler stack for distributed programming languages.

Hydroflow is designed with two goals in mind:

- Expert developers can program Hydroflow directly to build components in a distributed system.

- Higher levels of the Hydro stack will offer friendlier languages with more abstractions, and treat Hydroflow as a compiler target.

Hydroflow provides a DSL—a surface syntax—embedded in Rust, which compiles to high-efficiency machine code. As the lowest level of the Hydro stack, Hydroflow requires some knowledge of Rust to use.

This Book

This book will teach you how to set up your environment to get started with Hydroflow, and how to program in the Hydroflow surface syntax.

Keep in mind that Hydroflow is under active development and is constantly changing. However the code in this book is tested with the Hydroflow library so should always be up-to-date.

If you have any questions, feel free to create an issue on Github.

Quickstart

This section will get you up and running with Rust and Hydroflow, and work you through a set of concrete examples illustrating much of what you need to know to get productive with Hydroflow.

Setup

This section explains how to get Hydroflow running, either for development or usage, even if you are not familiar with Rust development.

Installing Rust

First you will need to install Rust. We recommend the conventional installation

method, rustup, which allows you to easily manage and update Rust versions.

The link in the previous line will take you to the Rust website that shows you how to

install rustup and the Rust package manager cargo (and the

internally-used rustc compiler). cargo is Rust's main development tool,

used for building, running, and testing Rust code.

The following cargo commands will come in handy:

cargo check --all-targets- Checks the workspace for any compile-time errors.cargo build --all-targets- Builds all projects/tests/benchmarks/examples in the workspace.cargo clean- Cleans the build cache, sometimes needed if the build is acting up.cargo test- Runs tests in the workspace.cargo run -p hydroflow --example <example name>- Run an example program inhydroflow/examples.

To learn Rust see the official Learn Rust page. Here are some good resources:

- The Rust Programming Language, AKA "The Book"

- Learn Rust With Entirely Too Many Linked Lists is a good way to learn Rust's ownership system and its implications.

In this book we will be using the Hydroflow template generator, which we recommend

as a starting point for your Hydroflow projects. For this purpose you

will need to install the cargo-generate tool:

cargo install cargo-generate

VS Code Setup

We recommend using VS Code with the rust-analyzer extension (and NOT the

Rust extension). To enable the pre-release version of rust-analyzer

(required by some new nightly syntax we use, at least until 2022-03-14), click

the "Switch to Pre-Release Version" button next to the uninstall button.

Setting up a Hydroflow Project

The easiest way to get started with Hydroflow is to begin with a template project. Create a directory where you'd like to put that project, direct your terminal there and run:

cargo generate hydro-project/hydroflow-template

You will be prompted to name your project. The cargo generate command will create a subdirectory

with the relevant files and folders.

As part of generating the project, the hydroflow library will be downloaded as a dependency.

You can then open the project in VS Code or IDE of your choice, or

you can simply build the template project with cargo build.

cd <project name>

cargo build

This should return successfully.

The template provides a simple working example of a Hydroflow program. As a sort of "hello, world" of distributed systems, it implements an "echo server" that simply echoes back the messages you sent it; it also implements a client to test the server. We will replace the code in that example with our own, but it's a good idea to run it first to make sure everything is working.

We call a running Hydroflow binary a transducer.

Start by running a transducer for the server:

% cargo run -- --role server

Listening on 127.0.0.1:<port>

Server live!

Take note of the server's port number, and in a separate terminal, start a client transducer:

% cd <project name>

% cargo run -- --role client --server-addr 127.0.0.1:<port>

Listening on 127.0.0.1:<client_port>

Connecting to server at 127.0.0.1:<port>

Client live!

Now you can type strings in the client, which are sent to the server, echo'ed back, and printed at the client. E.g.:

Hello!

2022-12-20 18:51:50.181647 UTC: Got Echo { payload: "Hello!", ts: 2022-12-20T18:51:50.180874Z } from 127.0.0.1:61065

Alternative: Checking out the Hydroflow Repository

This book will assume you are using the template project, but some Rust experts may want to get started with Hydroflow by cloning and working in the repository directly. You should fork the repository if you want to push your changes.

To clone the repo, run:

git clone git@github.com:hydro-project/hydroflow.git

Hydroflow requires nightly Rust, but the repo is already configured for it via

rust-toolchain.toml.

You can then open the repo in VS Code or IDE of your choice. In VS Code, rust-analyzer

will provide inline type and error messages, code completion, etc.

To work with the repository, it's best to start with an "example", found in the

hydroflow/examples folder.

These examples are included via the hydroflow/Cargo.toml file,

so make sure to add your example there if you create a new one. The simplest

example is the echo server.

The Hydroflow repository is set up as a workspace,

i.e. a repo containing a bunch of separate packages, hydroflow is just the

main one. So if you want to work in a proper separate cargo package, you can

create one and add it into the root Cargo.toml,

much like the provided template.

Simplest Example

In this example we will cover:

- Modifying the Hydroflow template project

- How Hydroflow program specs are embedded inside Rust

- How to execute a simple Hydroflow program

- Two Hydroflow operators:

source_iterandfor_each

Lets start out with the simplest possible Hydroflow program, which prints out

the numbers in 0..10.

Create a clean template project:

% cargo generate hydro-project/hydroflow-template

⚠️ Favorite `hydro-project/hydroflow-template` not found in config, using it as a git repository: https://github.com/hydro-project/hydroflow-template.git

🤷 Project Name: simple

🔧 Destination: /Users/jmh/code/sussudio/simple ...

🔧 project-name: simple ...

🔧 Generating template ...

[ 1/11] Done: .gitignore [ 2/11] Done: Cargo.lock [ 3/11] Done: Cargo.toml [ 4/11] Done: README.md [ 5/11] Done: rust-toolchain.toml [ 6/11] Done: src/client.rs [ 7/11] Done: src/helpers.rs [ 8/11] Done: src/main.rs [ 9/11] Done: src/protocol.rs [10/11] Done: src/server.rs [11/11] Done: src 🔧 Moving generated files into: `<dir>/simple`...

💡 Initializing a fresh Git repository

✨ Done! New project created <dir>/simple

Change directory into the resulting simple folder or open it in your IDE. Then edit the src/main.rs file, replacing

all of its contents with the following code:

use hydroflow::hydroflow_syntax; pub fn main() { let mut flow = hydroflow_syntax! { source_iter(0..10) -> for_each(|n| println!("Hello {}", n)); }; flow.run_available(); }

And then run the program:

% cargo run

Hello 0

Hello 1

Hello 2

Hello 3

Hello 4

Hello 5

Hello 6

Hello 7

Hello 8

Hello 9

Understanding the Code

Although this is a trivial program, it's useful to go through it line by line.

use hydroflow::hydroflow_syntax;This import gives you everything you need from Hydroflow to write code with Hydroflow's surface syntax.

Next, inside the main method we specify a flow by calling the

hydroflow_syntax! macro. We assign the resulting Hydroflow instance to

a mutable variable flow––mutable because we will be changing its status when we run it.

use hydroflow::hydroflow_syntax;

pub fn main() {

let mut flow = hydroflow_syntax! {

source_iter(0..10) -> for_each(|n| println!("Hello {}", n));

};Hydroflow surface syntax defines a "flow" consisting of operators connected via -> arrows.

This simplest example uses a simple two-step linear flow.

It starts with a source_iter operator that takes the Rust

iterator 0..10 and iterates it to emit the

numbers 0 through 9. That operator then passes those numbers along the -> arrow downstream to a

for_each operator that invokes its closure argument to print each

item passed in.

The Hydroflow surface syntax is merely a specification; it does not actually do anything

until we run it.

We run the flow from within Rust via the run_available() method.

flow.run_available();Note that run_available() runs the Hydroflow graph until no more work is immediately

available. In this example flow, running the graph drains the iterator completely, so no

more work will ever be available. In future examples we will use external inputs such as

network ingress, in which case more work might appear at any time.

A Note on Project Structure

The template project is intended to be a starting point for your own Hydroflow project, and you can add files and directories as you see fit. The only requirement is that the src/main.rs file exists and contains a main() function.

In this simplest example we did not use a number of the files in the template: notably everything in the src/ subdirectory other than src/main.rs. If you'd like to delete those extraneous files you can do so, but it's not necessary, and we'll use them in subsequent examples.

Simple Example

In this example we will cover some additional standard Hydroflow operators:

Lets build on the simplest example to explore some of the operators available

in Hydroflow. You may be familiar with operators such as map(...),

filter(...), flatten(...),

etc. from Rust iterators or from other programming languages, and these are

also available in Hydroflow.

In your simple project, replace the contents of src/main.rs with the following:

use hydroflow::hydroflow_syntax; pub fn main() { let mut flow = hydroflow_syntax! { source_iter(0..10) -> map(|n| n * n) -> filter(|&n| n > 10) -> map(|n| (n..=n+1)) -> flatten() -> for_each(|n| println!("Howdy {}", n)); }; flow.run_available(); }

Let's take this one operator at a time, starting after the source_iter operator we saw in the previous example.

-

-> map(|n| n * n)transforms each element individually as it flows through the subgraph. In this case, we square each number. -

Next,

-> filter(|&n| n > 10)only passes along squared numbers that are greater than 10. -

The subsequent

-> map(|n| (n..=n+1))uses standard Rust syntax to convert each numberninto aRangeInclusive[n,n+1]. -

The

-> flatten()operator converts the ranges back into a stream of the individual numbers which they contain. -

Finally we use the now-familiar

for_eachoperator to print each number.

Now let's run the program:

% cargo run

<build output>

Howdy 16

Howdy 17

Howdy 25

Howdy 26

Howdy 36

Howdy 37

Howdy 49

Howdy 50

Howdy 64

Howdy 65

Howdy 81

Howdy 82

Rewriting with Composite Operators

We can also express the same program with more aggressive use of composite operators like

filter_map() and flat_map(). Hydroflow will compile these down to the same

machine code.

Replace the contents of src/main.rs with the following:

use hydroflow::hydroflow_syntax; pub fn main() { let mut flow = hydroflow_syntax! { source_iter(0..10) -> filter_map(|n| { let n2 = n * n; if n2 > 10 { Some(n2) } else { None } }) -> flat_map(|n| (n..=n+1)) -> for_each(|n| println!("G'day {}", n)); }; flow.run_available(); }

Results:

% cargo run

<build output>

G'day 16

G'day 17

G'day 25

G'day 26

G'day 36

G'day 37

G'day 49

G'day 50

G'day 64

G'day 65

G'day 81

G'day 82

An Example With Streaming Input

In this example we will cover:

- the input

channelconcept, which streams data in from outside the Hydroflow spec- the

source_streamoperator that brings channel input into Hydroflow- Rust syntax to programmatically send data to a (local) channel

In our previous examples, data came from within the Hydroflow spec, via Rust iterators and the source_iter operator. In most cases, however, data comes from outside the Hydroflow spec. In this example, we'll see a simple version of this idea, with data being generated on the same machine and sent into the channel programmatically via Rust.

For discussion, we start with a skeleton much like before:

use hydroflow::hydroflow_syntax; pub fn main() { let mut hydroflow = hydroflow_syntax! { // code will go here }; hydroflow.run_available(); }

TODO: Make the following less intimidating to users who are not Tokio experts.

To add a new external input

channel, we can use the hydroflow::util::unbounded_channel() function in Rust before we declare the Hydroflow spec:

let (input_example, example_recv) = hydroflow::util::unbounded_channel::<usize>();Under the covers, this is a multiple-producer/single-consumer (mpsc) channel provided by Rust's tokio library, which is usually the appropriate choice for an inbound Hydroflow stream.

Think of it as a high-performance "mailbox" that any sender can fill with well-typed data.

The Rust ::<usize> syntax uses what is affectionately

called the "turbofish", which is how type parameters (generic arguments) are

supplied to generic types and functions. In this case it specifies that this tokio channel

transmits items of type usize.

The returned example_recv value can be used via a source_stream

to build a Hydroflow subgraph just like before.

Here is the same program as before, but using the

input channel. Back in the simple project, replace the contents of src/main.rs with the following:

use hydroflow::hydroflow_syntax; pub fn main() { // Create our channel input let (input_example, example_recv) = hydroflow::util::unbounded_channel::<usize>(); let mut flow = hydroflow_syntax! { source_stream(example_recv) -> filter_map(|n: usize| { let n2 = n * n; if n2 > 10 { Some(n2) } else { None } }) -> flat_map(|n| (n..=n+1)) -> for_each(|n| println!("Ahoy, {}", n)); }; println!("A"); input_example.send(1).unwrap(); input_example.send(0).unwrap(); input_example.send(2).unwrap(); input_example.send(3).unwrap(); input_example.send(4).unwrap(); input_example.send(5).unwrap(); flow.run_available(); println!("B"); input_example.send(6).unwrap(); input_example.send(7).unwrap(); input_example.send(8).unwrap(); input_example.send(9).unwrap(); flow.run_available(); }

% cargo run

<build output>

A

Ahoy, 16

Ahoy, 17

Ahoy, 25

Ahoy, 26

B

Ahoy, 36

Ahoy, 37

Ahoy, 49

Ahoy, 50

Ahoy, 64

Ahoy, 65

Ahoy, 81

Ahoy, 82

At the bottom of main.rs we can see how to programatically supply usize-typed inputs with the tokio

.send() method. We call Rust's .unwrap() method to ignore the error messages from

.send() in this simple case. In later examples we'll see how to

allow for data coming in over a network.

Graph Neighbors

In this example we cover:

- Assigning sub-flows to variables

- Our first multi-input operator,

join- Indexing multi-input operators by prepending a bracket expression

- The

uniqueoperator for removing duplicates from a stream- Visualizing hydroflow code via

flow.meta_graph().to_mermaid()- A first exposure to the concepts of strata and ticks

So far all the operators we've used have one input and one output and therefore create a linear flow of operators. Let's now take a look at a Hydroflow program containing an operator which has multiple inputs; in the following examples we'll extend this to multiple outputs.

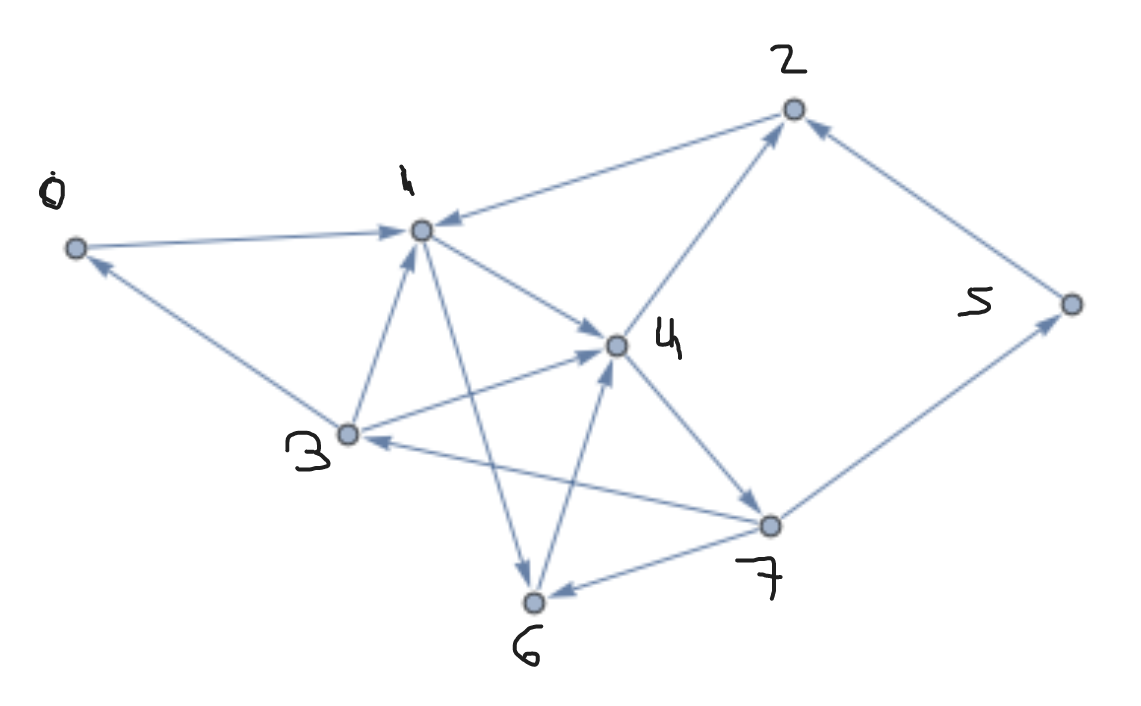

To motivate this, we are going to start out on a little project of building a flow-based algorithm

for the problem of graph reachability.

Given an abstract graph—represented as data in the form of a streaming list of edges—which

vertices can be reached from a vertex passed in as the origin? It turns out this is fairly

naturally represented as a dataflow program.

Note on terminology: In each of the next few examples, we're going to write a Hydroflow program (a dataflow graph) to process data that itself represents some other graph! To avoid confusion, in these examples, we'll refer to the Hydroflow program as a "flow" or "program", and the data as a "graph" of "edges" and "vertices".

But First: Graph Neighbors

Graph reachability exercises a bunch of concepts at once, so we'll start here with a simpler flow that finds graph neighbors: vertices that are just one hop away.

Our graph neighbors Hydroflow program will take

our initial origin vertex as one input, and join it another input that streams in all the edges—this

join will stream out the vertices that are one hop (edge) away from the starting vertex.

Here is an intuitive diagram of that dataflow program (we'll see complete, autogenerated Hydroflow diagrams below):

graph TD

subgraph sources

01[Stream of Edges]

end

subgraph neighbors of origin

00[Origin Vertex]

20("V ⨝ E")

40[Output]

00 --> 20

01 ---> 20

20 --> 40

end

Lets take a look at some Hydroflow code that implements the program. In your simple project,

replace the contents of src/main.rs with the following:

use hydroflow::hydroflow_syntax; pub fn main() { // An edge in the input data = a pair of `usize` vertex IDs. let (edges_send, edges_recv) = hydroflow::util::unbounded_channel::<(usize, usize)>(); let mut flow = hydroflow_syntax! { // inputs: the origin vertex (vertex 0) and stream of input edges origin = source_iter(vec![0]); stream_of_edges = source_stream(edges_recv); // the join my_join = join() -> flat_map(|(src, (_, dst))| [src, dst]); origin -> map(|v| (v, ())) -> [0]my_join; stream_of_edges -> [1]my_join; // the output my_join -> unique() -> for_each(|n| println!("Reached: {}", n)); }; println!( "{}", flow.meta_graph() .expect("No graph found, maybe failed to parse.") .to_mermaid() ); edges_send.send((0, 1)).unwrap(); edges_send.send((2, 4)).unwrap(); edges_send.send((3, 4)).unwrap(); edges_send.send((1, 2)).unwrap(); edges_send.send((0, 3)).unwrap(); edges_send.send((0, 3)).unwrap(); flow.run_available(); }

Run the program and focus on the last three lines of output, which come from flow.run_available():

% cargo run

<build output>

<graph output>

Reached: 0

Reached: 3

Reached: 1

That looks right: the edges we "sent" into the flow that start at 0 are

(0, 1) and (0, 3), so the nodes reachable from 0 in 0 or 1 hops are 0, 1, 3.

Note: When you run the program you may see the lines printed out in a different order. That's OK; the flow we're defining here is producing a

setof nodes, so the order in which they are printed out is not specified. Thesort_byoperator can be used to sort the output of a flow.

Examining the Hydroflow Code

In the code, we want to start out with the origin vertex, 0,

and the stream of edges coming in. Because this flow is a bit more complex

than our earlier examples, we break it down into named "subflows", assigning them variable

names that we can reuse. Here we specify two subflows, origin and stream_of_edges:

origin = source_iter(vec![0]);

stream_of_edges = source_stream(edges_recv);The Rust syntax vec![0] constructs a vector with a single element, 0, which we iterate

over using source_iter.

We then set up a join() that we

name my_join, which acts like a SQL inner join.

// the join

my_join = join() -> flat_map(|(src, (_, dst))| [src, dst]);

origin -> map(|v| (v, ())) -> [0]my_join;

stream_of_edges -> [1]my_join;First, note the syntax for passing data into a subflow with multiple inputs requires us to prepend

an input index (starting at 0) in square brackets to the multi-input variable name or operator. In this example we have -> [0]my_join

and -> [1]my_join.

Hydroflow's join() API requires

a little massaging of its inputs to work properly.

The inputs must be of the form of a pair of elements (K, V1)

and (K, V2), and the operator joins them on equal keys K and produces an

output of (K, (V1, V2)) elements. In this case we only want to join on the key v and

don't have any corresponding value, so we feed origin through a map()

to generate (v, ()) elements as the first join input.

The stream_of_edges are (src, dst) pairs,

so the join's output is (src, ((), dst)) where dst are new neighbor

vertices. So the my_join variable feeds the output of the join through a flat_map to extract the pairs into 2-item arrays, which are flattened to give us a list of all vertices reached.

Finally we print the neighbor vertices as follows:

my_join -> unique() -> for_each(|n| println!("Reached: {}", n));The unique operator removes duplicates from the stream to make things more readable. Note that unique does not run in a streaming fashion, which we will talk about more below.

There's

also some extra code here, flow.meta_graph().expect(...).to_mermaid(), which tells

Hydroflow to

generate a diagram rendered by Mermaid showing

the structure of the graph, and print it to stdout. You can copy that text and paste it into the Mermaid Live Editor to see the graph, which should look as follows:

%%{init: {'theme': 'base', 'themeVariables': {'clusterBkg':'#ddd'}}}%%

flowchart TD

classDef pullClass fill:#02f,color:#fff,stroke:#000

classDef pushClass fill:#ff0,stroke:#000

linkStyle default stroke:#aaa,stroke-width:4px,color:red,font-size:1.5em;

subgraph "sg_1v1 stratum 0"

1v1[\"(1v1) <tt>source_iter(vec! [0])</tt>"/]:::pullClass

2v1[\"(2v1) <tt>source_stream(edges_recv)</tt>"/]:::pullClass

5v1[\"(5v1) <tt>map(| v | (v, ()))</tt>"/]:::pullClass

3v1[\"(3v1) <tt>join()</tt>"/]:::pullClass

4v1[\"(4v1) <tt>flat_map(| (src, (_, dst)) | [src, dst])</tt>"/]:::pullClass

1v1--->5v1

2v1--1--->3v1

5v1--0--->3v1

3v1--->4v1

end

subgraph "sg_2v1 stratum 1"

6v1[/"(6v1) <tt>unique()</tt>"\]:::pushClass

7v1[/"(7v1) <tt>for_each(| n | println! ("Reached: {}", n))</tt>"\]:::pushClass

6v1--->7v1

end

4v1--->8v1

8v1["(8v1) <tt>handoff</tt>"]:::otherClass

8v1===o6v1

Notice in the mermaid graph how Hydroflow separates the unique operator and its downstream dependencies into their own

stratum (plural: strata). Note also the edge coming into unique is bold and ends in a ball: this is because the input to unique is

``blocking'', meaning that unique should not run until all of the input on that edge has been received.

The stratum boundary before unique ensures that the blocking property is respected.

You may also be wondering why the nodes in the graph have different colors (and shapes, for readers who cannot distinguish

colors easily). The answer has nothing to do with the meaning of the program, only with the way that Hydroflow compiles

operators into Rust. Simply put, blue (wide-topped) boxes pull data, yellow (wide-bottomed) boxes push data, and the handoff is a special operator that buffers pushed data for subsequent pulling. Hydroflow always places a handoff

between a push producer and a pull consumer, for reasons explained in the Architecture chapter.

Strata and Ticks

Hydroflow runs each stratum in order, one at a time, ensuring all values are computed before moving on to the next stratum. Between strata we see a handoff, which logically buffers the output of the first stratum, and delineates the separation of execution between the 2 strata.

After all strata are run, Hydroflow returns to the first stratum; this begins the next tick. This doesn't really matter for this example, but it is important for long-running Hydroflow services that accept input from the outside world. More on this topic in the chapter on time.

Returning to the code, if you read the edges_send calls carefully, you'll see that the example data

has vertices (2, 4) that are more than one hop away from 0, which were

not output by our simple program. To extend this example to graph reachability,

we need to recurse: find neighbors of our neighbors, neighbors of our neighbors' neighbors, and so on. In Hydroflow,

this is done by adding a loop to the flow, as we'll see in our next example.

Graph Reachability

In this example we cover:

- Implementing a recursive algorithm (graph reachability) via cyclic dataflow

- Operators to merge data from multiple inputs (

merge), and send data to multiple outputs (tee)- Indexing multi-output operators by appending a bracket expression

- An example of how a cyclic dataflow in one stratum executes to completion before starting the next stratum.

To expand from graph neighbors to graph reachability, we want to find vertices that are connected not just to origin,

but also to vertices reachable transitively from origin. Said differently, a vertex is reachable from origin if it is

one of two cases:

- a neighbor of

originor - a neighbor of some other vertex that is itself reachable from

origin.

It turns out this is a very small change to our Hydroflow program! Essentially we want to take all the reached vertices we found in our graph neighbors program,

and treat them recursively just as we treated origin.

To do this in a language like Hydroflow, we introduce a cycle in the flow:

we take the join output and have it

flow back into the join input. The modified intuitive graph looks like this:

graph TD

subgraph sources

01[Stream of Edges]

end

subgraph reachable from origin

00[Origin Vertex]

10[Reached Vertices]

20("V ⨝ E")

40[Output]

00 --> 10

10 --> 20

20 --> 10

01 --> 20

20 --> 40

end

Note that we added a Reached Vertices box to the diagram to merge the two inbound edges corresponding to our

two cases above. Similarly note that the join box V ⨝ E now has two outbound edges; the sketch omits the operator

to copy ("tee") the output along

two paths.

Now lets look at a modified version of our graph neighbor code that implements this full program, including the loop as well as the Hydroflow merge and tee.

Modify src/main.rs to look like this:

use hydroflow::hydroflow_syntax; pub fn main() { // An edge in the input data = a pair of `usize` vertex IDs. let (edges_send, edges_recv) = hydroflow::util::unbounded_channel::<(usize, usize)>(); let mut flow = hydroflow_syntax! { // inputs: the origin vertex (vertex 0) and stream of input edges origin = source_iter(vec![0]); stream_of_edges = source_stream(edges_recv); reached_vertices = merge(); origin -> [0]reached_vertices; // the join my_join_tee = join() -> flat_map(|(src, ((), dst))| [src, dst]) -> tee(); reached_vertices -> map(|v| (v, ())) -> [0]my_join_tee; stream_of_edges -> [1]my_join_tee; // the loop and the output my_join_tee[0] -> [1]reached_vertices; my_join_tee[1] -> unique() -> for_each(|x| println!("Reached: {}", x)); }; println!( "{}", flow.meta_graph() .expect("No graph found, maybe failed to parse.") .to_mermaid() ); edges_send.send((0, 1)).unwrap(); edges_send.send((2, 4)).unwrap(); edges_send.send((3, 4)).unwrap(); edges_send.send((1, 2)).unwrap(); edges_send.send((0, 3)).unwrap(); edges_send.send((0, 3)).unwrap(); flow.run_available(); }

And now we get the full set of vertices reachable from 0:

% cargo run

<build output>

<graph output>

Reached: 3

Reached: 0

Reached: 2

Reached: 4

Reached: 1

Examining the Hydroflow Code

Let's review the significant changes here. First, in setting up the inputs we have the

addition of the reached_vertices variable, which uses the merge()

op to merge the output of two operators into one.

We route the origin vertex into it as one input right away:

reached_vertices = merge();

origin -> [0]reached_vertices;Note the square-bracket syntax for differentiating the multiple inputs to merge()

is the same as that of join() (except that merge can have an unbounded number of inputs,

whereas join() is defined to only have two.)

Now, join() is defined to only have one output. In our program, we want to copy

the joined output

output to two places: to the original for_each from above to print output, and also

back to the merge operator we called reached_vertices.

We feed the join() output

through a flat_map() as before, and then we feed the result into a tee() operator,

which is the mirror image of merge(): instead of merging many inputs to one output,

it copies one input to many different outputs. Each input element is cloned, in Rust terms, and

given to each of the outputs. The syntax for the outputs of tee() mirrors that of merge: we append

an output index in square brackets to the tee or variable. In this example we have

my_join_tee[0] -> and my_join_tee[1] ->.

Finally, we process the output of the join as passed through the tee.

One branch pushes reached vertices back up into the reached_vertices variable (which begins with a merge), while the other

prints out all the reached vertices as in the simple program.

my_join_tee[0] -> [1]reached_vertices;

my_join_tee[1] -> for_each(|x| println!("Reached: {}", x));Note the syntax for differentiating the outputs of a tee() is symmetric to that of merge(),

showing up to the right of the variable rather than the left.

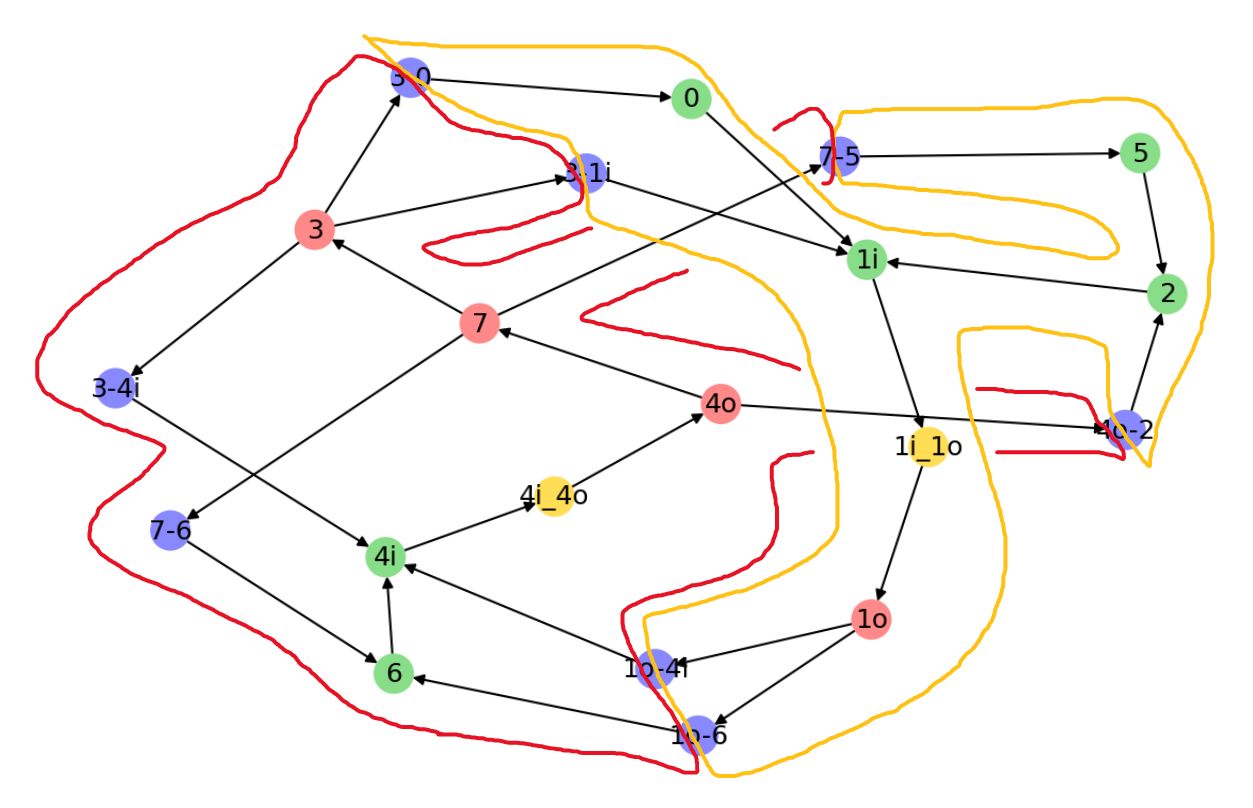

Below is the diagram rendered by mermaid showing the structure of the full flow:

%%{init: {'theme': 'base', 'themeVariables': {'clusterBkg':'#ddd'}}}%%

flowchart TD

classDef pullClass fill:#02f,color:#fff,stroke:#000

classDef pushClass fill:#ff0,stroke:#000

linkStyle default stroke:#aaa,stroke-width:4px,color:red,font-size:1.5em;

subgraph "sg_1v1 stratum 0"

1v1[\"(1v1) <tt>source_iter(vec! [0])</tt>"/]:::pullClass

2v1[\"(2v1) <tt>source_stream(edges_recv)</tt>"/]:::pullClass

3v1[\"(3v1) <tt>merge()</tt>"/]:::pullClass

7v1[\"(7v1) <tt>map(| v | (v, ()))</tt>"/]:::pullClass

4v1[\"(4v1) <tt>join()</tt>"/]:::pullClass

5v1[/"(5v1) <tt>flat_map(| (src, ((), dst)) | [src, dst])</tt>"\]:::pushClass

6v1[/"(6v1) <tt>tee()</tt>"\]:::pushClass

10v1["(10v1) <tt>handoff</tt>"]:::otherClass

10v1--1--->3v1

1v1--0--->3v1

2v1--1--->4v1

3v1--->7v1

7v1--0--->4v1

4v1--->5v1

5v1--->6v1

6v1--0--->10v1

end

subgraph "sg_2v1 stratum 1"

8v1[/"(8v1) <tt>unique()</tt>"\]:::pushClass

9v1[/"(9v1) <tt>for_each(| x | println! ("Reached: {}", x))</tt>"\]:::pushClass

8v1--->9v1

end

6v1--1--->11v1

11v1["(11v1) <tt>handoff</tt>"]:::otherClass

11v1===o8v1

This is similar to the flow for graph neighbors, but has a few more operators that make it look

more complex. In particular, it includes the merge and tee operators, and a cycle-forming back-edge

that passes through an auto-generated handoff operator. This handoff is not a stratum boundary (after all, it connects stratum 0 to itself!) It simply enforces the rule that a push producer and a pull consumer must be separated by a handoff.

Meanwhile, note that there is once again a stratum boundary between the stratum 0 with its recursive loop, and stratum 1 that computes unique, with the blocking input. This means that Hydroflow will first run the loop of stratum 0 repeatedly until all the transitive reached vertices are found, before moving on to compute the unique reached vertices.

Graph Un-Reachability

In this example we cover:

- Extending a program with additional downstream logic.

- Hydroflow's (

difference) operator- Further examples of automatic stratification.

Our next example builds on the previous by finding vertices that are not reachable. To do this, we need to capture the set all_vertices, and use a difference operator to form the difference between that set of vertices and reachable_vertices.

Essentially we want a flow like this:

graph TD

subgraph sources

01[Stream of Edges]

end

subgraph reachable from origin

00[Origin Vertex]

10[Reached Vertices]

20("V ⨝ E")

00 --> 10

10 --> 20

20 --> 10

01 --> 20

end

subgraph unreachable

15[All Vertices]

30(All - Reached)

01 ---> 15

15 --> 30

10 --> 30

30 --> 40

end

40[Output]

This is a simple augmentation of our previous example. Replace the contents of src/main.rs with the following:

use hydroflow::hydroflow_syntax; pub fn main() { // An edge in the input data = a pair of `usize` vertex IDs. let (pairs_send, pairs_recv) = hydroflow::util::unbounded_channel::<(usize, usize)>(); let mut flow = hydroflow_syntax! { origin = source_iter(vec![0]); stream_of_edges = source_stream(pairs_recv) -> tee(); reached_vertices = merge()->tee(); origin -> [0]reached_vertices; // the join for reachable vertices my_join = join() -> flat_map(|(src, ((), dst))| [src, dst]); reached_vertices[0] -> map(|v| (v, ())) -> [0]my_join; stream_of_edges[1] -> [1]my_join; // the loop my_join -> [1]reached_vertices; // the difference all_vertices - reached_vertices all_vertices = stream_of_edges[0] -> flat_map(|(src, dst)| [src, dst]) -> tee(); unreached_vertices = difference(); all_vertices[0] -> [pos]unreached_vertices; reached_vertices[1] -> [neg]unreached_vertices; // the output all_vertices[1] -> unique() -> for_each(|v| println!("Received vertex: {}", v)); unreached_vertices -> for_each(|v| println!("unreached_vertices vertex: {}", v)); }; println!( "{}", flow.meta_graph() .expect("No graph found, maybe failed to parse.") .to_mermaid() ); pairs_send.send((5, 10)).unwrap(); pairs_send.send((0, 3)).unwrap(); pairs_send.send((3, 6)).unwrap(); pairs_send.send((6, 5)).unwrap(); pairs_send.send((11, 12)).unwrap(); flow.run_available(); }

Notice that we are now sending in some new pairs to test this code. The output should be:

% cargo run

<build output>

<graph output>

Received vertex: 12

Received vertex: 6

Received vertex: 11

Received vertex: 0

Received vertex: 5

Received vertex: 10

Received vertex: 3

unreached_vertices vertex: 12

unreached_vertices vertex: 11

Let's review the changes, all of which come at the end of the program. First,

we remove code to print reached_vertices. Then we define all_vertices to be

the vertices that appear in any edge (using familiar flat_map code from the previous

examples.) In the last few lines, we wire up a

difference operator

to compute the difference between all_vertices and reached_vertices; note

how we wire up the pos and neg inputs to the difference operator!

Finally we print both all_vertices and unreached_vertices.

The auto-generated mermaid looks like so:

%%{init: {'theme': 'base', 'themeVariables': {'clusterBkg':'#ddd'}}}%%

flowchart TD

classDef pullClass fill:#02f,color:#fff,stroke:#000

classDef pushClass fill:#ff0,stroke:#000

linkStyle default stroke:#aaa,stroke-width:4px,color:red,font-size:1.5em;

subgraph "sg_1v1 stratum 0"

1v1[\"(1v1) <tt>source_iter(vec! [0])</tt>"/]:::pullClass

8v1[\"(8v1) <tt>map(| v | (v, ()))</tt>"/]:::pullClass

6v1[\"(6v1) <tt>join()</tt>"/]:::pullClass

7v1[\"(7v1) <tt>flat_map(| (src, ((), dst)) | [src, dst])</tt>"/]:::pullClass

4v1[\"(4v1) <tt>merge()</tt>"/]:::pullClass

5v1[/"(5v1) <tt>tee()</tt>"\]:::pushClass

15v1["(15v1) <tt>handoff</tt>"]:::otherClass

15v1--->8v1

1v1--0--->4v1

8v1--0--->6v1

6v1--->7v1

7v1--1--->4v1

4v1--->5v1

5v1--0--->15v1

end

subgraph "sg_2v1 stratum 0"

2v1[\"(2v1) <tt>source_stream(pairs_recv)</tt>"/]:::pullClass

3v1[/"(3v1) <tt>tee()</tt>"\]:::pushClass

9v1[/"(9v1) <tt>flat_map(| (src, dst) | [src, dst])</tt>"\]:::pushClass

10v1[/"(10v1) <tt>tee()</tt>"\]:::pushClass

2v1--->3v1

3v1--0--->9v1

9v1--->10v1

end

subgraph "sg_3v1 stratum 1"

12v1[/"(12v1) <tt>unique()</tt>"\]:::pushClass

13v1[/"(13v1) <tt>for_each(| v | println! ("Received vertex: {}", v))</tt>"\]:::pushClass

12v1--->13v1

end

subgraph "sg_4v1 stratum 1"

11v1[\"(11v1) <tt>difference()</tt>"/]:::pullClass

14v1[/"(14v1) <tt>for_each(| v | println! ("unreached_vertices vertex: {}", v))</tt>"\]:::pushClass

11v1--->14v1

end

3v1--1--->16v1

5v1--1--->18v1

10v1--0--->17v1

10v1--1--->19v1

16v1["(16v1) <tt>handoff</tt>"]:::otherClass

16v1--1--->6v1

17v1["(17v1) <tt>handoff</tt>"]:::otherClass

17v1--pos--->11v1

18v1["(18v1) <tt>handoff</tt>"]:::otherClass

18v1==neg===o11v1

19v1["(19v1) <tt>handoff</tt>"]:::otherClass

19v1===o12v1

If you look carefully, you'll see two subgraphs labeled with stratum 0, and two with

stratum 1. The reason the strata were broken into subgraphs has nothing to do with

correctness, but rather the way that Hydroflow graphs are compiled and scheduled, as

discussed in the chapter on Architecture.

All the subgraphs labeled stratum 0 are run first to completion,

and then all the subgraphs labeled stratum 1 are run. This captures the requirements of the unique and difference operators used in the lower subgraphs: each has to wait for its full inputs before it can start producing output. Note

how the difference operator has two inputs (labeled pos and neg), and only the neg input shows up as blocking (with the bold edge ending in a ball).

Networked Services 1: EchoServer

In this example we cover:

- The standard project template for networked Hydroflow services.

- Rust's

clapcrate for command-line options- Defining message types

- Destination operators (e.g. for sending data to a network)

- Network sources and dests with built-in serde (

source_stream_serde,dest_sink_serde)- The

source_stdinsource- Long-running services via

run_async

Our examples up to now have been simple single-node programs, to get us comfortable with Hydroflow's surface syntax. But the whole point of Hydroflow is to help us write distributed programs or services that run on a cluster of machines!

In this example we'll study the "hello, world" of distributed systems -- a simple echo server. It will listen on a UDP port, and send back a copy of any message it receives, with a timestamp. We will also look at a client to accept strings from the command line, send them to the echo server, and print responses.

We will use a fresh hydroflow-template project template to get started. Change to the directory where you'd like to put your project, and once again run:

cargo generate hydro-project/hydroflow-template

Then change directory into the resulting project.

The README.md for the template project is a good place to start. It contains a brief overview of the project structure, and how to build and run the example. Here we'll spend more time learning from the code.

Hydroflow Project Structure

The Hydroflow template project auto-generates this example for us. If you prefer, you can find the source in the examples/echo_server directory of the Hydroflow repository.

The directory structure encouraged by the template is as follows:

project/README.md # documentation

project/Cargo.toml # package and dependency info

project/src/main.rs # main function

project/src/protocol.rs # message types exchanged between roles

project/src/helpers.rs # helper functions used by all roles

project/src/<roleA>.rs # service definition for role A (e.g. server)

project/src/<roleB>.rs # service definition for role B (e.g. client)

In the default example, the roles we use are Client and Server, but you can imagine different roles depending on the structure of your service or application.

main.rs

We start with a main function that parses command-line options, and invokes the appropriate

role-specific service.

After a prelude of imports, we start by defining a Rust enum for the Roles that the service supports.

use clap::{Parser, ValueEnum};

use client::run_client;

use hydroflow::tokio;

use hydroflow::util::{bind_udp_bytes, ipv4_resolve};

use server::run_server;

use std::net::SocketAddr;

mod client;

mod helpers;

mod protocol;

mod server;

#[derive(Clone, ValueEnum, Debug)]

enum Role {

Client,

Server,

}Following that, we use Rust's clap (Command Line Argument Parser) crate to parse command-line options:

#[derive(Parser, Debug)]

struct Opts {

#[clap(value_enum, long)]

role: Role,

#[clap(long, value_parser = ipv4_resolve)]

addr: Option<SocketAddr>,

#[clap(long, value_parser = ipv4_resolve)]

server_addr: Option<SocketAddr>,

}This sets up 3 command-line flags: role, addr, and server_addr. Note how the addr and server_addr flags are made optional via wrapping in a Rust Option; by contrast, the role option is required. The clap crate will parse the command-line options and populate the Opts struct with the values. clap handles parsing the command line strings into the associated Rust types -- the value_parser attribute tells clap to use Hydroflow's ipv4_resolve helper function to parse a string like "127.0.0.1:6552" into a SocketAddr.

This brings us to the main function itself. It is prefaced by a #[tokio::main] attribute, which is a macro that sets up the tokio runtime. This is necessary because Hydroflow uses the tokio runtime for asynchronous execution as a service.

#[tokio::main]

async fn main() {

// parse command line arguments

let opts = Opts::parse();

// if no addr was provided, we ask the OS to assign a local port by passing in "localhost:0"

let addr = opts

.addr

.unwrap_or_else(|| ipv4_resolve("localhost:0").unwrap());

// allocate `outbound` sink and `inbound` stream

let (outbound, inbound, addr) = bind_udp_bytes(addr).await;

println!("Listening on {:?}", addr);After parsing the command line arguments we set up some Rust-based networking. Specifically, for either client or server roles we will need to allocate a UDP socket that is used for both sending and receiving messages. We do this by calling the async bind_udp_bytes function, which is defined in the hydroflow/src/util module. As an async function it returns a Future, so requires appending .await; the function returns a triple of type (UdpSink, UdpSource, SocketAddr). The first two are the types that we'll use in Hydroflow to send and receive messages, respectively. (Note: your IDE might expand out the UdpSink and UdpSource traits to their more verbose definitions. That is fine; you can ignore those.) The SocketAddr is there in case you specified port 0 in your addr argument, in which case this return value tells you what port the OS has assigned for you.

All that's left is to fire up the code for the appropriate role!

match opts.role {

Role::Server => {

run_server(outbound, inbound, opts).await;

}

Role::Client => {

run_client(outbound, inbound, opts).await;

}

}

}protocol.rs

As a design pattern, it is natural in distributed Hydroflow programs to define various message types in a protocol.rs file with structures shared for use by all the Hydroflow logic across roles. In this simple example, we define only one message type: EchoMsg, and a simple struct with two fields: payload and ts (timestamp). The payload field is a string, and the ts field is a DateTime<Utc>, which is a type from the chrono crate. Note the various derived traits on EchoMsg—specifically Serialize and Deserialize—these are required for structs that we send over the network.

use chrono::prelude::*;

use serde::{Deserialize, Serialize};

#[derive(PartialEq, Clone, Serialize, Deserialize, Debug)]

pub struct EchoMsg {

pub payload: String,

pub ts: DateTime<Utc>,

}server.rs

Things get interesting when we look at the run_server function. This function is the main entry point for the server. It takes as arguments the outbound and inbound sockets from main, and the Opts (which are ignored).

After printing a cheery message, we get into the Hydroflow code for the server, consisting of three short pipelines.

use crate::protocol::EchoMsg;

use chrono::prelude::*;

use hydroflow::hydroflow_syntax;

use hydroflow::scheduled::graph::Hydroflow;

use hydroflow::util::{UdpSink, UdpStream};

use std::net::SocketAddr;

pub(crate) async fn run_server(outbound: UdpSink, inbound: UdpStream, _opts: crate::Opts) {

println!("Server live!");

let mut flow: Hydroflow = hydroflow_syntax! {

// Define a shared inbound channel

inbound_chan = source_stream_serde(inbound) -> tee();

// Print all messages for debugging purposes

inbound_chan[0]

-> for_each(|(msg, addr): (EchoMsg, SocketAddr)| println!("{}: Got {:?} from {:?}", Utc::now(), msg, addr));

// Echo back the Echo messages with updated timestamp

inbound_chan[1]

-> map(|(EchoMsg {payload, ..}, addr)| (EchoMsg { payload, ts: Utc::now() }, addr) ) -> dest_sink_serde(outbound);

};

// run the server

flow.run_async().await;

}Lets take the Hydroflow code one statement at a time.

The first pipeline, inbound_chan uses a source operator we have not seen before, source_stream_serde(). This is a streaming source like source_stream, but for network streams. It takes a UdpSource as an argument, and has a particular output type: a stream of (T, SocketAddr) pairs where T is some type that implements the Serialize and Deserialize traits (together known as "serde"), and SocketAddr is the network address of the sender of the item. In this case, T is EchoMsg, which we defined in protocol.rs, and the SocketAddr is the address of the client that sent the message. We pipe the result into a tee() for reuse.

The second pipeline is a simple for_each to print the messages received at the server.

The third and final pipeline constructs a response EchoMsg with the local timestamp copied in. It then pipes the result into a dest_XXX operator—the first that we've seen! A dest is the opposite of a source_XXX operator: it can go at the end of a pipeline and sends data out on a tokio channel. The specific operator used here is dest_sink_serde(). This is a dest operator like dest_sink, but for network streams. It takes a UdpSink as an argument, and requires a particular input type: a stream of (T, SocketAddr) pairs where T is some type that implements the Serialize and Deserialize traits, and SocketAddr is the network address of the destination. In this case, T is once again EchoMsg, and the SocketAddr is the address of the client that sent the original message.

The remaining line of code runs the server. The run_async() function is a method on the Hydroflow type. It is an async function, so we append .await to the call. The program will block on this call until the server is terminated.

client.rs

The client begins by making sure the user specified a server address at the command line. After printing a message to the terminal, it constructs a hydroflow graph.

Again, we start the hydroflow code defining shared inbound and outbound channels. The code here is simplified compared

to the server because the inbound_chan and outbound_chan are each referenced only once, so they do not require tee or merge operators, respectively (they have been commented out).

The inbound_chan drives a pipeline that prints messages to the screen.

Only the last pipeline is novel for us by now. It uses another new source operator source_stdin(), which does what you might expect: it streams lines of text as they arrive from stdin (i.e. as they are typed into a terminal). It then uses a map to construct an EchoMsg with each line of text and the current timestamp. The result is piped into a sink operator dest_sink_serde(), which sends the message to the server.

The client logic ends by launching the flow graph with flow.run_async().await.unwrap().

use crate::protocol::EchoMsg;

use crate::Opts;

use chrono::prelude::*;

use hydroflow::hydroflow_syntax;

use hydroflow::util::{UdpSink, UdpStream};

use std::net::SocketAddr;

pub(crate) async fn run_client(outbound: UdpSink, inbound: UdpStream, opts: Opts) {

// server_addr is required for client

let server_addr = opts.server_addr.expect("Client requires a server address");

println!("Client live!");

let mut flow = hydroflow_syntax! {

// Define shared inbound and outbound channels

inbound_chan = source_stream_serde(inbound)

// -> tee() // commented out since we only use this once in the client template

;

outbound_chan = // merge() -> // commented out since we only use this once in the client template

dest_sink_serde(outbound);

// Print all messages for debugging purposes

inbound_chan

-> for_each(|(m, a): (EchoMsg, SocketAddr)| println!("{}: Got {:?} from {:?}", Utc::now(), m, a));

// take stdin and send to server as an Message::Echo

source_stdin() -> map(|l| (EchoMsg{ payload: l.unwrap(), ts: Utc::now(), }, server_addr) )

-> outbound_chan;

};

flow.run_async().await.unwrap();

}Running the example

As described in the README.md file, we can run the server in one terminal, and the client in another. The server will print the messages it receives, and the client will print the messages it receives back from the server. The client and servers' `--server-addr' arguments need to match or this won't work!

Fire up the server in terminal 1:

% cargo run -p hydroflow --example echoserver -- --role server --addr localhost:12347

Then start the client in terminal 2 and type some messages!

% cargo run -p hydroflow --example echoserver -- --role client --server-addr localhost:12347

Listening on 127.0.0.1:54532

Connecting to server at 127.0.0.1:12347

Client live!

This is a test

2022-12-15 05:40:11.258052 UTC: Got Echo { payload: "This is a test", ts: 2022-12-15T05:40:11.257145Z } from 127.0.0.1:12347

This is the rest

2022-12-15 05:40:14.025216 UTC: Got Echo { payload: "This is the rest", ts: 2022-12-15T05:40:14.023577Z } from 127.0.0.1:12347

And have a look back at the server console!

Listening on 127.0.0.1:12347

Server live!

2022-12-15 05:40:11.256640 UTC: Got Echo { payload: "This is a test", ts: 2022-12-15T05:40:11.254207Z } from 127.0.0.1:54532

2022-12-15 05:40:14.023363 UTC: Got Echo { payload: "This is the rest", ts: 2022-12-15T05:40:14.020897Z } from 127.0.0.1:54532

Networked Services 2: Chat Server

In this example we cover:

- Multiple message types and the

demuxoperator.- A broadcast pattern via the

cross_joinoperator.- One-time bootstrapping pipelines

- A "gated buffer" pattern via

cross_joinwith a single-object input.

Our previous echo server example was admittedly simplistic. In this example, we'll build something a bit more useful: a simple chat server. We will again have two roles: a Client and a Server. Clients will register their presence with the Server, which maintains a list of clients. Each Client sends messages to the Server, which will then broadcast those messages to all other clients.

main.rs

The main.rs file here is very similar to that of the echo server, just with two new command-line arguments: one called name for a "nickname" in the chatroom, and another optional argument graph for printing a dataflow graph if desired. To follow along, you can copy the contents of this file into the src/main.rs file of your template.

use clap::{Parser, ValueEnum};

use client::run_client;

use hydroflow::tokio;

use hydroflow::util::{bind_udp_bytes, ipv4_resolve};

use server::run_server;

use std::net::SocketAddr;

mod client;

mod protocol;

mod server;

#[derive(Clone, ValueEnum, Debug)]

enum Role {

Client,

Server,

}

#[derive(Clone, ValueEnum, Debug)]

enum GraphType {

Mermaid,

Dot,

Json,

}

#[derive(Parser, Debug)]

struct Opts {

#[clap(long)]

name: String,

#[clap(value_enum, long)]

role: Role,

#[clap(long, value_parser = ipv4_resolve)]

client_addr: Option<SocketAddr>,

#[clap(long, value_parser = ipv4_resolve)]

server_addr: Option<SocketAddr>,

#[clap(value_enum, long)]

graph: Option<GraphType>,

}

#[tokio::main]

async fn main() {

let opts = Opts::parse();

// if no addr was provided, we ask the OS to assign a local port by passing in "localhost:0"

let addr = opts

.addr

.unwrap_or_else(|| ipv4_resolve("localhost:0").unwrap());

// allocate `outbound` sink and `inbound` stream

let (outbound, inbound, addr) = bind_udp_bytes(addr).await;

println!("Listening on {:?}", addr);

match opts.role {

Role::Client => {

run_client(outbound, inbound, opts).await;

}

Role::Server => {

run_server(outbound, inbound, opts).await;

}

}

}protocol.rs

Our protocol file here expands upon what we saw with the echoserver by defining multiple message types.

Replace the template contents of src/protocol.rs with the following:

use chrono::prelude::*;

use serde::{Deserialize, Serialize};

#[derive(PartialEq, Eq, Clone, Serialize, Deserialize, Debug)]

pub enum Message {

ConnectRequest,

ConnectResponse,

ChatMsg {

nickname: String,

message: String,

ts: DateTime<Utc>,

},

}Note how we use a single Rust enum to represent all varieties of message types; this allows us to handle Messages of different types with a single Rust network channel. We will use the demux operator to separate out these different message types on the receiving end.

The ConnectRequest and ConnectResponse messages have no payload;

the address of the sender and the type of the message will be sufficient information. The ChatMsg message type has a nickname field, a message field, and a ts

field for the timestamp. Once again we use the chrono crate to represent timestamps.

server.rs

The chat server is nearly as simple as the echo server. The main differences are (a) we need to handle multiple message types, (b) we need to keep track of the list of clients, and (c) we need to broadcast messages to all clients.

To follow along, replace the contents of src/server.rs with the code below:

use crate::{GraphType, Opts};

use hydroflow::util::{UdpSink, UdpStream};

use crate::protocol::Message;

use hydroflow::hydroflow_syntax;

use hydroflow::scheduled::graph::Hydroflow;

pub(crate) async fn run_server(outbound: UdpSink, inbound: UdpStream, opts: Opts) {

println!("Server live!");

let mut df: Hydroflow = hydroflow_syntax! {

// Define shared inbound and outbound channels

outbound_chan = merge() -> dest_sink_serde(outbound);

inbound_chan = source_stream_serde(inbound)

-> demux(|(msg, addr), var_args!(clients, msgs, errs)|

match msg {

Message::ConnectRequest => clients.give(addr),

Message::ChatMsg {..} => msgs.give(msg),

_ => errs.give(msg),

}

);

clients = inbound_chan[clients] -> tee();

inbound_chan[errs] -> for_each(|m| println!("Received unexpected message type: {:?}", m));After a short prelude, we have the Hydroflow code near the top of run_server(). It begins by defining outbound_chan as a merged destination sink for network messages. Then we get to the

more interesting inbound_chan definition.

The inbound channel is a source stream that will carry many

types of Messages. We use the demux operator to partition the stream objects into three channels. The clients channel

will carry the addresses of clients that have connected to the server. The msgs channel will carry the ChatMsg messages that clients send to the server.

The errs channel will carry any other messages that clients send to the server.

Note the structure of the demux operator: it takes a closure on

(Message, SocketAddr) pairs, and a variadic tuple (var_args!) of output channel names—in this case clients, msgs, and errs. The closure is basically a big

Rust pattern match, with one arm for each output channel name given in the variadic tuple. Note

that the different output channels can have different-typed messages! Note also that we destructure the incoming Message types into tuples of fields. (If we didn't we'd either have to write boilerplate code for each message type in every downstream pipeline, or face Rust's dreaded refutable pattern error!)

The remainder of the server consists of two independent pipelines, the code to print out the flow graph,

and the code to run the flow graph. To follow along, paste the following into the bottom of your src/server.rs file:

// Pipeline 1: Acknowledge client connections

clients[0] -> map(|addr| (Message::ConnectResponse, addr)) -> [0]outbound_chan;

// Pipeline 2: Broadcast messages to all clients

broadcast = cross_join() -> [1]outbound_chan;

inbound_chan[msgs] -> [0]broadcast;

clients[1] -> [1]broadcast;

};

if let Some(graph) = graph {

let serde_graph = df

.meta_graph()

.expect("No graph found, maybe failed to parse.");

match graph {

GraphType::Mermaid => {

println!("{}", serde_graph.to_mermaid());

}

GraphType::Dot => {

println!("{}", serde_graph.to_dot())

}

GraphType::Json => {

unimplemented!();

// println!("{}", serde_graph.to_json())

}

}

}

df.run_async().await.unwrap();

}The first pipeline is one line long,

and is responsible for acknowledging requests from clients: it takes the address of the incoming Message::ConnectRequest

and sends a ConnectResponse back to that address. The second pipeline is responsible for broadcasting

all chat messages to all clients. This all-to-all pairing corresponds to the notion of a cartesian product

or cross_join in Hydroflow. The cross_join operator takes two input

channels and produces a single output channel with a tuple for each pair of inputs, in this case it produces

(Message, SocketAddr) pairs. Conveniently, that is exactly the structure needed for sending to the outbound_chan sink!

We call the cross-join pipeline broadcast because it effectively broadcasts all messages to all clients.

The mermaid graph for the server is below. The three branches of the demux are very clear toward the top. Note also the tee of the clients channel

for both ClientResponse and broadcasting, and the merge of all outbound messages into dest_sink_serde.

%%{init: {'theme': 'base', 'themeVariables': {'clusterBkg':'#ddd'}}}%%

flowchart TD

classDef pullClass fill:#02f,color:#fff,stroke:#000

classDef pushClass fill:#ff0,stroke:#000

linkStyle default stroke:#aaa,stroke-width:4px,color:red,font-size:1.5em;

subgraph "sg_1v1 stratum 0"

7v1[\"(7v1) <tt>map(| addr | (Message :: ConnectResponse, addr))</tt>"/]:::pullClass

8v1[\"(8v1) <tt>cross_join()</tt>"/]:::pullClass

1v1[\"(1v1) <tt>merge()</tt>"/]:::pullClass

2v1[/"(2v1) <tt>dest_sink_serde(outbound)</tt>"\]:::pushClass

7v1--0--->1v1

8v1--1--->1v1

1v1--->2v1

end

subgraph "sg_2v1 stratum 0"

3v1[\"(3v1) <tt>source_stream_serde(inbound)</tt>"/]:::pullClass

4v1[/"(4v1) <tt>demux(| (msg, addr), var_args! (clients, msgs, errs) | match msg<br>{<br> Message :: ConnectRequest => clients.give(addr), Message :: ChatMsg { .. }<br> => msgs.give(msg), _ => errs.give(msg),<br>})</tt>"\]:::pushClass

5v1[/"(5v1) <tt>tee()</tt>"\]:::pushClass

6v1[/"(6v1) <tt>for_each(| m | println! ("Received unexpected message type: {:?}", m))</tt>"\]:::pushClass

3v1--->4v1

4v1--clients--->5v1

4v1--errs--->6v1

end

4v1--msgs--->10v1

5v1--0--->9v1

5v1--1--->11v1

9v1["(9v1) <tt>handoff</tt>"]:::otherClass

9v1--->7v1

10v1["(10v1) <tt>handoff</tt>"]:::otherClass

10v1--0--->8v1

11v1["(11v1) <tt>handoff</tt>"]:::otherClass

11v1--1--->8v1

client.rs

The chat client is not very different from the echo server client, with two new design patterns:

- a degenerate

source_iterpipeline that runs once as a "bootstrap" in the first tick - the use of

cross_joinas a "gated buffer" to postpone sending messages.

We also include a Rust helper routine pretty_print_msg for formatting output.

The prelude of the file is almost the same as the echo server client, with the addition of a crate for

handling colored text output. This is followed by the pretty_print_msg function, which is fairly self-explanatory.

To follow along, start by replacing the contents of src/client.rs with the following:

use crate::protocol::Message;

use crate::{GraphType, Opts};

use chrono::prelude::*;

use hydroflow::hydroflow_syntax;

use hydroflow::util::{UdpSink, UdpStream};

use chrono::Utc;

use colored::Colorize;

fn pretty_print_msg(msg: Message) {

if let Message::ChatMsg {

nickname,

message,

ts,

} = msg

{

println!(

"{} {}: {}",

ts.with_timezone(&Local)

.format("%b %-d, %-I:%M:%S")

.to_string()

.truecolor(126, 126, 126)

.italic(),

nickname.green().italic(),

message,

);

}

}This brings us to the run_client function. As in run_server we begin by ensuring the server address

is supplied. We then have the hydroflow code starting with a standard pattern of a merged outbound_chan,

and a demuxed inbound_chan. The client handles only two inbound Message types: Message::ConnectResponse and Message::ChatMsg.

Paste the following to the bottom of src/client.rs:

pub(crate) async fn run_client(outbound: UdpSink, inbound: UdpStream, opts: Opts) {

// server_addr is required for client

let server_addr = opts.server_addr.expect("Client requires a server address");

println!("Client live!");

let mut hf = hydroflow_syntax! {

// set up channels

outbound_chan = merge() -> dest_sink_serde(outbound);

inbound_chan = source_stream_serde(inbound) -> map(|(m, _)| m)

-> demux(|m, var_args!(acks, msgs, errs)|

match m {

Message::ConnectResponse => acks.give(m),

Message::ChatMsg {..} => msgs.give(m),

_ => errs.give(m),

}

);

inbound_chan[errs] -> for_each(|m| println!("Received unexpected message type: {:?}", m));The core logic of the client consists of three dataflow pipelines shown below. Paste this into the

bottom of your src/client.rs file.

// send a single connection request on startup

source_iter([()]) -> map(|_m| (Message::ConnectRequest, server_addr)) -> [0]outbound_chan;

// take stdin and send to server as a msg

// the cross_join serves to buffer msgs until the connection request is acked

msg_send = cross_join() -> map(|(msg, _)| (msg, server_addr)) -> [1]outbound_chan;

lines = source_stdin()

-> map(|l| Message::ChatMsg {

nickname: opts.name.clone(),

message: l.unwrap(),

ts: Utc::now()})

-> [0]msg_send;

inbound_chan[acks] -> [1]msg_send;

// receive and print messages

inbound_chan[msgs] -> for_each(pretty_print_msg);

};-

The first pipeline is the "bootstrap" alluded to above. It starts with

source_iteroperator that emits a single, opaque "unit" (()) value. This value is available when the client begins, which means this pipeline runs once, immediately on startup, and generates a singleConnectRequestmessage which is sent to the server. -

The second pipeline reads from

source_stdinand sends messages to the server. It differs from our echo-server example in the use of across_joinwithinbound_chan[acks]. This cross-join is similar to that of the server: it forms pairs between all messages and all servers that send aConnectResponseack. In principle this means that the client is broadcasting each message to all servers. In practice, however, the client establishes at most one connection to a server. Hence over time, this pipeline starts with zeroConnectResponses and is sending no messages; subsequently it receives a singleConnectResponseand starts sending messages. Thecross_joinis thus effectively a buffer for messages, and a "gate" on that buffer that opens when the client receives its soleConnectResponse. -

The final pipeline simply pretty-prints the messages received from the server.

Finish up the file by pasting the code below for optionally generating the graph and running the flow:

// optionally print the dataflow graph

if let Some(graph) = opts.graph {

let serde_graph = hf

.meta_graph()

.expect("No graph found, maybe failed to parse.");

match graph {

GraphType::Mermaid => {

println!("{}", serde_graph.to_mermaid());

}

GraphType::Dot => {

println!("{}", serde_graph.to_dot())

}

GraphType::Json => {

unimplemented!();

}

}

}

hf.run_async().await.unwrap();

}The client's mermaid graph looks a bit different than the server's, mostly because it routes some data to the screen rather than to an outbound network channel.

%%{init: {'theme': 'base', 'themeVariables': {'clusterBkg':'#ddd'}}}%%

flowchart TD

classDef pullClass fill:#02f,color:#fff,stroke:#000

classDef pushClass fill:#ff0,stroke:#000

linkStyle default stroke:#aaa,stroke-width:4px,color:red,font-size:1.5em;

subgraph "sg_1v1 stratum 0"

7v1[\"(7v1) <tt>source_iter([()])</tt>"/]:::pullClass

8v1[\"(8v1) <tt>map(| _m | (Message :: ConnectRequest, server_addr))</tt>"/]:::pullClass

11v1[\"(11v1) <tt>source_stdin()</tt>"/]:::pullClass

12v1[\"(12v1) <tt>map(| l | Message :: ChatMsg<br>{ nickname : opts.name.clone(), message : l.unwrap(), ts : Utc :: now() })</tt>"/]:::pullClass

9v1[\"(9v1) <tt>cross_join()</tt>"/]:::pullClass

10v1[\"(10v1) <tt>map(| (msg, _) | (msg, server_addr))</tt>"/]:::pullClass

1v1[\"(1v1) <tt>merge()</tt>"/]:::pullClass

2v1[/"(2v1) <tt>dest_sink_serde(outbound)</tt>"\]:::pushClass

7v1--->8v1

8v1--0--->1v1

11v1--->12v1

12v1--0--->9v1

9v1--->10v1

10v1--1--->1v1

1v1--->2v1

end

subgraph "sg_2v1 stratum 0"

3v1[\"(3v1) <tt>source_stream_serde(inbound)</tt>"/]:::pullClass

4v1[/"(4v1) <tt>map(| (m, _) | m)</tt>"\]:::pushClass

5v1[/"(5v1) <tt>demux(| m, var_args! (acks, msgs, errs) | match m<br>{<br> Message :: ConnectResponse => acks.give(m), Message :: ChatMsg { .. } =><br> msgs.give(m), _ => errs.give(m),<br>})</tt>"\]:::pushClass

6v1[/"(6v1) <tt>for_each(| m | println! ("Received unexpected message type: {:?}", m))</tt>"\]:::pushClass

13v1[/"(13v1) <tt>for_each(pretty_print_msg)</tt>"\]:::pushClass

3v1--->4v1

4v1--->5v1

5v1--errs--->6v1

5v1--msgs--->13v1

end

5v1--acks--->14v1

14v1["(14v1) <tt>handoff</tt>"]:::otherClass

14v1--1--->9v1

Running the example

As described in hydroflow/hydroflow/example/chat/README.md, we can run the server in one terminal, and run clients in additional terminals.

The client and server need to agree on server-addr or this won't work!

Fire up the server in terminal 1:

% cargo run -p hydroflow --example chat -- --name "_" --role server --server-addr 127.0.0.1:12347

Start client "alice" in terminal 2 and type some messages, and you'll see them echoed back to you. This will appear in colored fonts in most terminals (but unfortunately not in this markdown-based book!)

% cargo run -p hydroflow --example chat -- --name "alice" --role client --server-addr 127.0.0.1:12347

Listening on 127.0.0.1:50617

Connecting to server at 127.0.0.1:12347

Client live!

Hello (hello hello) ... is there anybody in here?

Dec 13, 12:04:34 alice: Hello (hello hello) ... is there anybody in here?

Just nod if you can hear me.

Dec 13, 12:04:58 alice: Just nod if you can hear me.

Is there anyone home?

Dec 13, 12:05:01 alice: Is there anyone home?

Now start client "bob" in terminal 3, and notice how he instantly receives the backlog of Alice's messages from the server's cross_join.

(The messages may not be printed in the same order as they were timestamped! The cross_join operator is not guaranteed to preserve order, nor

is the udp network. Fixing these issues requires extra client logic that we leave as an exercise to the reader.)

% cargo run -p hydroflow --example chat -- --name "bob" --role client --server-addr 127.0.0.1:12347

Listening on 127.0.0.1:63018

Connecting to server at 127.0.0.1:12347

Client live!

Dec 13, 12:05:01 alice: Is there anyone home?

Dec 13, 12:04:58 alice: Just nod if you can hear me.

Dec 13, 12:04:34 alice: Hello (hello hello) ... is there anybody in here?

Now in terminal 3, Bob can respond:

*nods*

Dec 13, 12:05:05 bob: *nods*

and if we go back to terminal 2 we can see that Alice gets the message too:

Dec 13, 12:05:05 bob: *nods*

Hydroflow Surface Syntax

The natural way to write a Hydroflow program is using the Surface Syntax documented here.

It is a chained Iterator-style syntax of operators built into Hydroflow that should be sufficient

for most uses. If you want lower-level access you can work with the Core API documented in the Architecture section.

In this chapter we go over the syntax piece by piece: how to embed surface syntax in Rust and how to specify flows, which consist of data sources flowing through operators.

As a teaser, here is a Rust/Hydroflow "HELLO WORLD" program:

#![allow(unused)] fn main() { use hydroflow::hydroflow_syntax; pub fn test_hello_world() { let mut df = hydroflow_syntax! { source_iter(vec!["hello", "world"]) -> map(|x| x.to_uppercase()) -> for_each(|x| println!("{}", x)); }; df.run_available(); } }

Embedding a Flow in Rust

Hydroflow's surface syntax is typically used within a Rust program. (An interactive client and/or external language bindings are TBD.)

The surface syntax is embedded into Rust via a macro as follows

#![allow(unused)] fn main() { use hydroflow::hydroflow_syntax; pub fn example() { let mut flow = hydroflow_syntax! { // Hydroflow Surface Syntax goes here }; } }

The resulting flow object is of type Hydroflow.

Flow Syntax

Flows consist of named operators that are connected via flow edges denoted by ->. The example below

uses the source_iter operator to generate two strings from a Rust vec, the

map operator to apply some Rust code to uppercase each string, and the for_each

operator to print each string to stdout.

source_iter(vec!["Hello", "world"])

-> map(|x| x.to_uppercase()) -> for_each(|x| println!("{}", x));Flows can be assigned to variable names for convenience. E.g, the above can be rewritten as follows:

source_iter(vec!["Hello", "world"]) -> upper_print;

upper_print = map(|x| x.to_uppercase()) -> for_each(|x| println!("{}", x));Note that the order of the statements (lines) doesn't matter. In this example, upper_print is

referenced before it is assigned, and that is completely OK and better matches the flow of

data, making the program more understandable.

Operators with Multiple Ports

Some operators have more than one input port that can be referenced by ->. For example merge

merges the contents of many flows, so it can have an abitrary number of input ports. Some operators have multiple outputs, notably tee,

which has an arbitrary number of outputs.

In the syntax, we optionally distinguish input ports via an indexing prefix number

in square brackets before the name (e.g. [0]my_join and [1]my_join). We

can distinguish output ports by an indexing suffix (e.g. my_tee[0]).

Here is an example that tees one flow into two, handles each separately, and then merges them to print out the contents in both lowercase and uppercase:

my_tee = source_iter(vec!["Hello", "world"]) -> tee();

my_tee -> map(|x| x.to_uppercase()) -> my_merge;

my_tee -> map(|x| x.to_lowercase()) -> my_merge;

my_merge = merge() -> for_each(|x| println!("{}", x));merge() and tee() treat all their input/outputs the same, so we omit the indexing.

Here is a visualization of the flow that was generated:

%%{init:{'theme':'base','themeVariables':{'clusterBkg':'#ddd','clusterBorder':'#888'}}}%%

flowchart TD

classDef pullClass fill:#02f,color:#fff,stroke:#000

classDef pushClass fill:#ff0,stroke:#000

linkStyle default stroke:#aaa,stroke-width:4px,color:red,font-size:1.5em;

subgraph sg_1v1 ["sg_1v1 stratum 0"]

1v1[\"(1v1) <tt>source_iter(vec! ["Hello", "world"])</tt>"/]:::pullClass

2v1[/"(2v1) <tt>tee()</tt>"\]:::pushClass

1v1--->2v1

subgraph sg_1v1_var_my_tee ["var <tt>my_tee</tt>"]

1v1

2v1

end

end

subgraph sg_2v1 ["sg_2v1 stratum 0"]

3v1[\"(3v1) <tt>map(| x : & str | x.to_uppercase())</tt>"/]:::pullClass

4v1[\"(4v1) <tt>map(| x : & str | x.to_lowercase())</tt>"/]:::pullClass

5v1[\"(5v1) <tt>merge()</tt>"/]:::pullClass

6v1[/"(6v1) <tt>for_each(| x | println! ("{}", x))</tt>"\]:::pushClass

3v1--0--->5v1

4v1--1--->5v1

5v1--->6v1

subgraph sg_2v1_var_my_merge ["var <tt>my_merge</tt>"]

5v1

6v1

end

end

2v1--0--->7v1

2v1--1--->8v1

7v1["(7v1) <tt>handoff</tt>"]:::otherClass

7v1--->3v1

8v1["(8v1) <tt>handoff</tt>"]:::otherClass

8v1--->4v1

Hydroflow compiled this flow into two subgraphs called compiled components, connected by handoffs. You can ignore these details unless you are interested in low-level performance tuning; they are explained in the discussion of in-out trees.

A note on assigning flows with multiple ports

TODO: Need to document the port numbers for variables assigned to tree- or dag-shaped flows

The context object

Closures inside surface syntax operators have access to a special context object which provides

access to scheduling, timing, and state APIs. The object is accessible as a shared reference

(&Context) via the special name context.

Here is the full API documentation for Context.

source_iter([()])

-> for_each(|()| println!("Current tick: {}, stratum: {}", context.current_tick(), context.current_stratum()));

// Current tick: 0, stratum: 0Data Sources and Sinks in Rust

Any useful flow requires us to define sources of data, either generated computationally or received from and outside environment via I/O.

source_iter

A flow can receive data from a Rust collection object via the source_iter operator, which takes the

iterable collection as an argument and passes the items down the flow.

For example, here we iterate through a vector of usize items and push them down the flow:

source_iter(vec![0, 1]) -> ...The Hello, World example above uses this construct.

source_stream

More commonly, a flow should handle external data coming in asynchronously from a Tokio runtime.

One way to do this is with channels that allow Rust code to send data into the Hydroflow inputs.

The code below creates a channel for data of (Rust) type (usize, usize):

let (input_send, input_recv) = hydroflow::util::unbounded_channel::<(usize, usize)>();Under the hood this uses Tokio unbounded channels. Now in Rust we can now push data into the channel. E.g. for testing we can do it explicitly as follows:

input_send.send((0, 1)).unwrap()And in our Hydroflow syntax we can receive the data from the channel using the source_stream syntax and

pass it along a flow:

source_stream(input_recv) -> ...To put this together, let's revisit our Hello, World example from above with data sent in from outside the flow:

#![allow(unused)] fn main() { use hydroflow::hydroflow_syntax; let (input_send, input_recv) = hydroflow::util::unbounded_channel::<&str>(); let mut flow = hydroflow_syntax! { source_stream(input_recv) -> map(|x| x.to_uppercase()) -> for_each(|x| println!("{}", x)); }; input_send.send("Hello").unwrap(); input_send.send("World").unwrap(); flow.run_available(); }

TODO: add source_stream_serde

Hydroflow's Operators

In our previous examples we made use of some of Hydroflow's operators. Here we document each operator in more detail. Most of these operators are based on the Rust equivalents for iterators; see the Rust documentation.

anti_join

| Inputs | Syntax | Outputs | Flow |